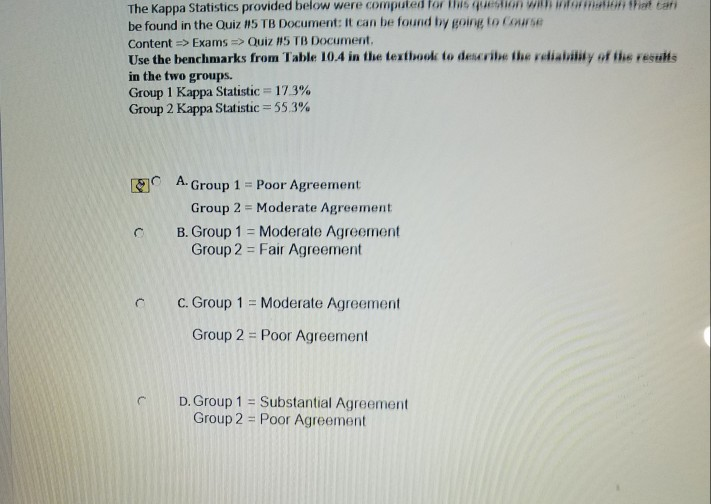

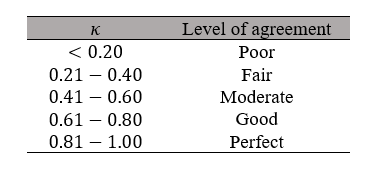

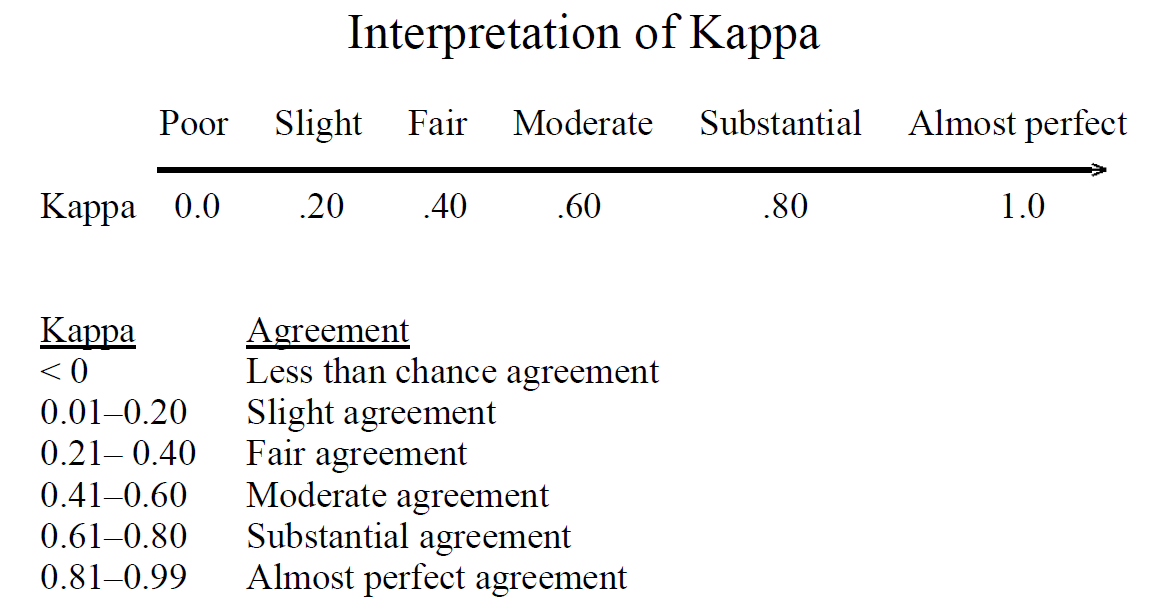

Interpretation of Kappa Values. The kappa statistic is frequently used… | by Yingting Sherry Chen | Towards Data Science

File:Comparison of rubrics for evaluating inter-rater kappa (and intra-class correlation) coefficients.png - Wikimedia Commons

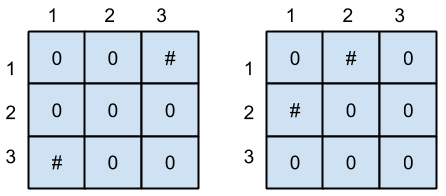

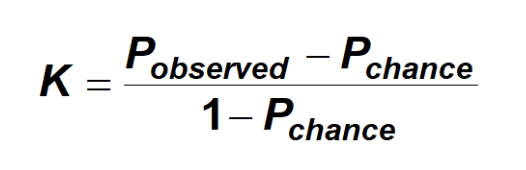

Cohen's Kappa and Fleiss' Kappa— How to Measure the Agreement Between Raters | by Audhi Aprilliant | Medium

The strength of agreement is interpreted considering the kappa coefficient. | Download Scientific Diagram

![\[Statistics Part 15\] Measuring agreement between assessment techniques: Intraclass correlation coefficient, Cohen's Kappa, R-squared value – Data Lab Bangladesh](https://datalabbd.com/wp-content/uploads/2019/06/15c-1.png)

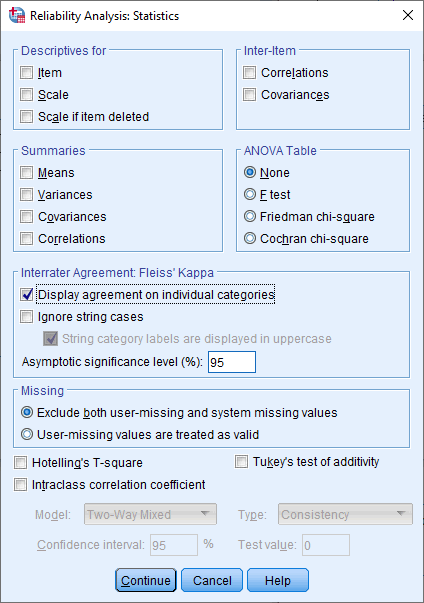

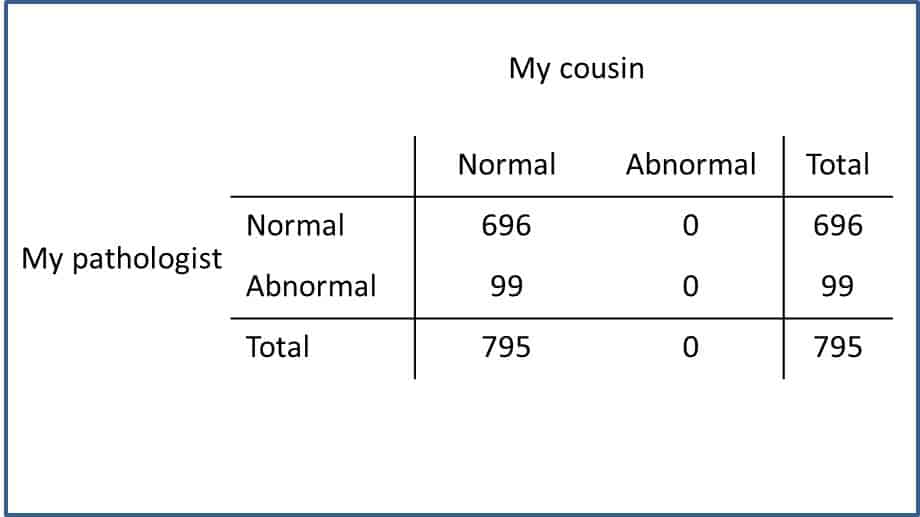

[Statistics Part 15] Measuring agreement between assessment techniques: Intraclass correlation coefficient, Cohen's Kappa, R-squared value – Data Lab Bangladesh

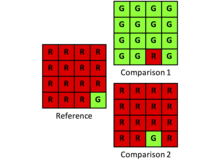

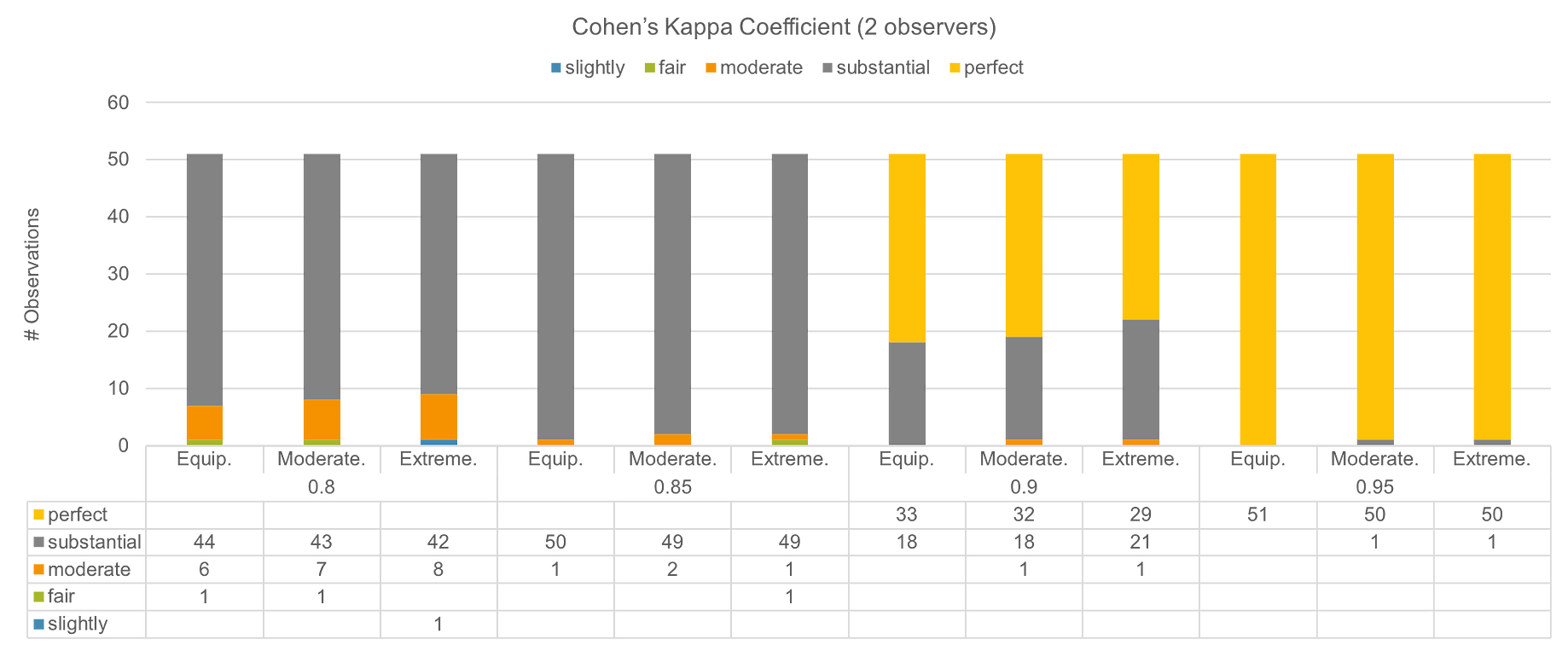

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

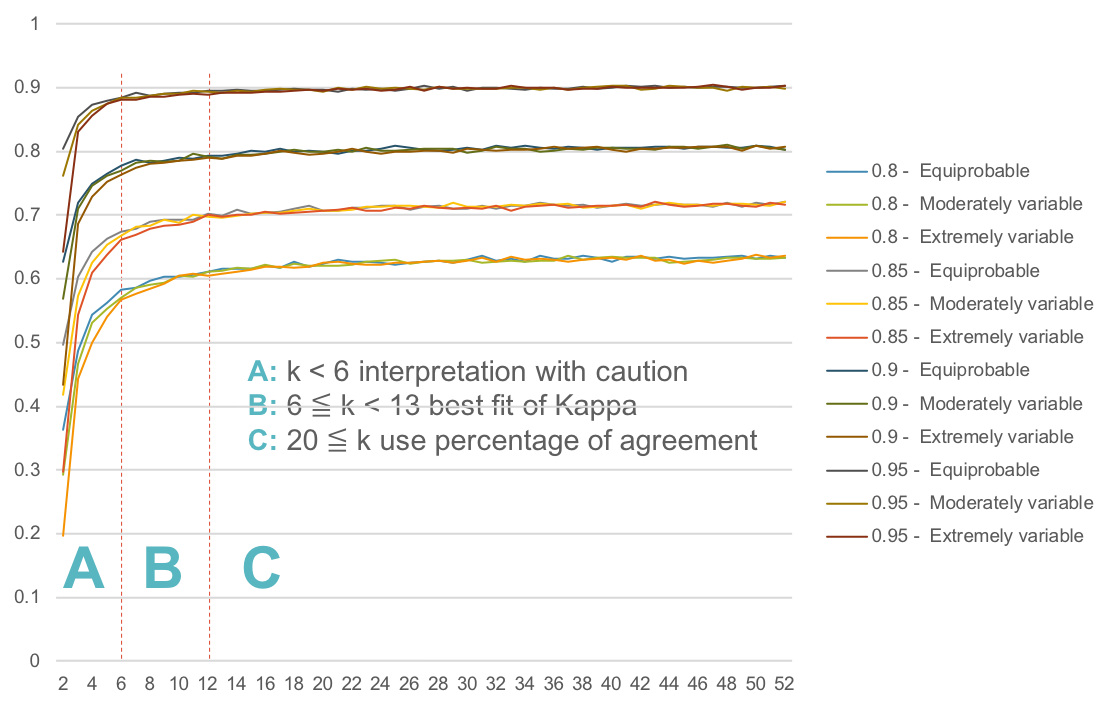

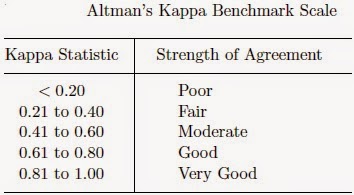

K. Gwet's Inter-Rater Reliability Blog: Benchmarking Agreement Coefficients | Inter-rater reliability: Cohen kappa, Gwet AC1/AC2, Krippendorff Alpha

![Kappa Statistics and Strength of Agreement [44]. | Download Scientific Diagram](https://www.researchgate.net/publication/340998576/figure/tbl2/AS:888538270797824@1588855440209/Kappa-Statistics-and-Strength-of-Agreement-44.png)